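According to foreign media reports, social media platforms have become the main news source for most people. This has raised concerns about a "filter bubble": if most of the news a user receives comes from friends, will what they see be skewed toward one particular ideology? Facebook's latest study, covering July 2014 to January 2015, finds little evidence of such a link. The company recently published the study in Science.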

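The report finds that most people have friends who hold different political views, so getting news this way can actually broaden the range of information they see.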

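Facebook says that although News Feed surfaces content slightly more aligned with a user's own ideology, whom users befriend and what they choose to click on matter more than News Feed ranking in determining how much diverse content they encounter. Among users who self-report a liberal or conservative affiliation, on average 23 percent of their friends hold an opposing political ideology, and 29.5 percent of the hard news their friends share cuts across ideological lines. As early as 2012, Facebook research had already found that much of the information people see and share comes from weak ties: friends who are more likely to differ from them.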

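However, the report does not compare the balance of viewpoints users get from Facebook with the balance they get from traditional media. It does, however, suggest that Facebook does not confine users to one-sided news.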

The original English text follows in full:

As people increasingly turn to social networks for news and civic information, questions have been raised about whether this practice leads to the creation of “echo chambers,” in which people are exposed only to information from like-minded individuals [2]. Other speculation has focused on whether algorithms used to rank search results and social media posts could create “filter bubbles,” in which only ideologically appealing content is surfaced [3].

Research we have conducted to date, however, runs counter to this picture. A previous 2012 research paper concluded that much of the information we are exposed to and share comes from weak ties: those friends we interact with less often and are more likely to be dissimilar to us than our close friends [4]. Separate research suggests that individuals are more likely to engage with content contrary to their own views when it is presented along with social information [5].

Our latest research, released today in Science, quantifies, for the first time, exactly how much individuals could be and are exposed to ideologically diverse news and information in social media [1].

We found that people have friends who claim an opposing political ideology, and that the content in people’s News Feeds reflects those diverse views. While News Feed surfaces content that is slightly more aligned with an individual’s own ideology (based on that person’s actions on Facebook), who they friend and what content they click on are more consequential than the News Feed ranking in terms of how much diverse content they encounter.

Specifically, we find that among those who self-report a liberal or conservative affiliation,

- On average, 23 percent of people’s friends claim an opposing political ideology.

- Of the hard news content that people’s friends share, 29.5 percent of it cuts across ideological lines.

- When it comes to what people see in the News Feed, 28.5 percent of the hard news encountered cuts across ideological lines, on average.

- 24.9 percent of the hard news content that people actually clicked on was cross-cutting.

Sharing news on Facebook

During the six months between July 2014 and January 2015, more than 7 million distinct Web links (URLs) were shared by people on Facebook in the United States. We were interested in learning how much people were exposed to “hard news” (stories about politics, world affairs, and the economy) rather than “soft news” (stories about entertainment, celebrities, and sports), and whether this information was aligned primarily with liberal or conservative audiences. To do this, we trained a support vector machine classifier that uses the first few words of the article behind each URL shared on Facebook. This allowed us to identify more than 226,000 unique hard news articles that had been shared at least 100 times.
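The post does not describe the classifier beyond this, so what follows is only a minimal sketch of the technique under stated assumptions. It uses bag-of-words features over the first `FIRST_N_WORDS` tokens (a hypothetical value) and labels of +1 for hard news and -1 for soft news. The linear SVM is trained by Pegasos-style subgradient descent on the L2-regularized hinge loss. The `MIN_SHARES = 100` cutoff is the threshold stated in the text. All function names are hypothetical.

```python
import random
from collections import Counter

FIRST_N_WORDS = 10  # hypothetical; the post only says "the first few words"
MIN_SHARES = 100    # share threshold stated in the text


def features(text):
    """Bag-of-words counts over the first FIRST_N_WORDS lowercase tokens."""
    return Counter(text.lower().split()[:FIRST_N_WORDS])


def score(w, x):
    """Dot product of a sparse weight dict and a sparse feature Counter."""
    return sum(w.get(tok, 0.0) * c for tok, c in x.items())


def train_linear_svm(docs, labels, lam=0.01, epochs=20, seed=0):
    """Pegasos-style training of a linear SVM (no bias term).

    labels: +1 for hard news, -1 for soft news.
    """
    rng = random.Random(seed)
    data = [(features(d), y) for d, y in zip(docs, labels)]
    w = {}
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)
            margin = y * score(w, x)
            # Regularization step: shrink all weights toward zero.
            for tok in w:
                w[tok] *= 1.0 - eta * lam
            # Hinge-loss subgradient step, taken only when the margin is below 1.
            if margin < 1.0:
                for tok, c in x.items():
                    w[tok] = w.get(tok, 0.0) + eta * y * c
    return w


def is_hard_news(w, text):
    return score(w, features(text)) > 0.0


def select_hard_news(w, articles):
    """articles: iterable of (url, text, share_count) tuples."""
    return [url for url, text, shares in articles
            if shares >= MIN_SHARES and is_hard_news(w, text)]
```

For millions of URLs, an established solver such as scikit-learn's `LinearSVC` would be the practical choice. The hand-rolled loop only shows what such a classifier optimizes.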

Next, we characterized the content as either conservative or liberal. Nine percent of Facebook users in the United States self-report their political affiliation on their profiles. We mapped the most common affiliations to a five-point scale ranging from -2 (very liberal) to 2 (very conservative). By averaging the affiliations of those who shared each article, we could measure the ideological “alignment” of each story. To be clear, this score is a measure of the *ideological alignment of the audience who shares an article*, and is not a measure of political bias or slant of the article. This calculation is described in the illustration below.

Illustration of how ideological alignment for content is measured. For each shared item, we average the political affiliation of the individuals who share it. In the leftmost example above, the article was shared by five people, three of whom identified themselves as liberals, one as a moderate, and one as a conservative, producing an average of -2/5.
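The alignment score described above is a plain mean over an article's sharers. Here is a minimal sketch. It assumes the numeric mapping implied by the illustration's example: liberal = -1, moderate = 0, conservative = +1, with the ends of the five-point scale at -2 and +2. The label strings and function name are hypothetical, since the post does not list them. `Fraction` keeps the result exact, so the -2/5 from the example comes out unrounded.

```python
from fractions import Fraction

# Hypothetical label names; the -2..+2 range is the five-point scale from the text.
AFFILIATION_SCORE = {
    "very liberal": -2,
    "liberal": -1,
    "moderate": 0,
    "conservative": 1,
    "very conservative": 2,
}


def alignment(sharer_affiliations):
    """Average self-reported affiliation of the people who shared one article.

    This describes the sharing audience, not the slant of the article itself.
    """
    scores = [AFFILIATION_SCORE[a] for a in sharer_affiliations]
    if not scores:
        raise ValueError("article has no sharers with a reported affiliation")
    return Fraction(sum(scores), len(scores))


# The illustration's example: three liberals, one moderate, one conservative.
print(alignment(["liberal"] * 3 + ["moderate", "conservative"]))  # -2/5
```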

When we average this measure for every story from a particular website domain, we can see key differences in well-known ideologically-aligned media sources: FoxNews.com is aligned with conservatives (As = +.80), while HuffingtonPost.com is aligned with liberals (As = -.65). There was substantial polarization among hard news shared on Facebook, with the most frequently shared links clearly aligned with largely liberal or conservative populations, as shown below.

This figure shows that most links to particular “hard news” articles are shared either primarily by liberals (alignment score close to -1) or by conservatives (alignment score close to +1) but rarely by both equally.
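The domain-level averaging described above can be sketched as a group-by over story URLs. The scores FoxNews.com (+.80) and HuffingtonPost.com (-.65) are the post's results and are not reproduced here. The URLs and scores in the usage below are invented for illustration. Normalizing hosts by stripping a leading `www.` is an assumption about how domains were matched. `urllib.parse.urlsplit(...).hostname` returns the lowercased host.

```python
from collections import defaultdict
from statistics import mean
from urllib.parse import urlsplit


def domain_of(url):
    """Lowercased host name, with a leading 'www.' removed (an assumption)."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def domain_alignment(story_scores):
    """story_scores: iterable of (url, alignment score) -> {domain: mean score}."""
    by_domain = defaultdict(list)
    for url, score in story_scores:
        by_domain[domain_of(url)].append(score)
    return {domain: mean(scores) for domain, scores in by_domain.items()}


# Invented example data.
print(domain_alignment([
    ("http://www.example-news.com/a", 0.75),
    ("http://www.example-news.com/b", 0.25),
    ("https://other.example.org/x", -0.5),
]))
```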

Using the methods described above, we turned toward measuring the extent to which people could be, and are, exposed to ideologically diverse information on Facebook.

Network structure and ideology

Homophily, the tendency for similar individuals to associate with each other (“birds of a feather flock together”), is a robust social phenomenon. Friends are more likely to be similar in age, educational attainment, occupation, and geography. It is not surprising to find that the same holds true for political affiliation on Facebook. We can see how liberals and conservatives tend to connect to people with similar political affiliations based on sample ego-networks depicted in the visualizations below.

Example social networks for a liberal, a moderate, and a conservative. Points are individuals’ friends, and lines designate friendships between them.

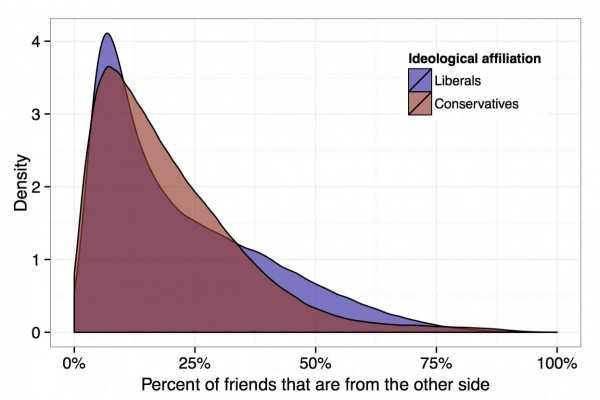

However, among those who report their ideology, on average, about 23 percent of their friends report an affiliation on the opposite side of the ideological spectrum. From the figure below, we can see that there is a wide range of network diversity. Half of users have between 9 and 33 percent of friends from opposing ideologies, while 25 percent have less than 9 percent and the remaining 25 percent have more than 33 percent.

Percent of friends from opposing ideologies among liberals and conservatives.
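The network-diversity figures above are per-user shares summarized by quartiles. Here is a sketch of that computation. It assumes each person's ideology has been reduced to a side (-1 liberal, +1 conservative, anything else unidentified). It also assumes that only friends who report a side count toward the denominator. The post specifies neither detail, and the function names are hypothetical. `statistics.quantiles(data, n=4)` returns the three quartile cut points. On the reported data these would be roughly 0.09, the median, and 0.33.

```python
from statistics import quantiles


def opposing_friend_share(user_side, friend_sides):
    """Fraction of a user's ideologically identified friends on the opposite side."""
    identified = [s for s in friend_sides if s in (-1, 1)]
    if not identified:
        return None
    return sum(1 for s in identified if s == -user_side) / len(identified)


def diversity_quartiles(users):
    """users: iterable of (side, friend_sides). Returns (Q1, median, Q3) of the shares."""
    shares = [opposing_friend_share(side, friends)
              for side, friends in users if side in (-1, 1)]
    shares = [s for s in shares if s is not None]
    return tuple(quantiles(shares, n=4))
```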

The flow of information on Facebook

The diversity of content people encounter depends not only on who their friends are, but also what information those friends share, and the interaction between people and Facebook’s News Feed. News Feed shows you all of the content shared by your friends, but the most relevant content is shown first. Exactly what stories people click on depends on how often they use Facebook, how far down they scroll in the News Feed, and the choices they make about what to read.

Illustration of how the exposure process consists of three phases: (1) the news your friends share (Potential from network), (2) what individuals see in their News Feeds, governed by ranking and by how far they scroll (Exposed), and (3) clicking through to the actual article (Selected).
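The three phases above can be sketched as a small pipeline. Potential is everything friends share. Exposed is the top of a ranked feed, as far as the person scrolls. Selected is the exposed items they click. The post does not describe how News Feed ranks stories. The `rank_score` field, scroll depth, and click set are therefore hypothetical inputs standing in for those mechanisms. The function reports the cross-cutting share at each stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    url: str
    cross_cutting: bool  # True if the story's alignment opposes the viewer's side
    rank_score: float    # hypothetical relevance score used to order the feed


def funnel(shared, scroll_depth, clicked_urls):
    """Cross-cutting share at the potential, exposed and selected stages."""
    potential = list(shared)
    ranked = sorted(potential, key=lambda s: s.rank_score, reverse=True)
    exposed = ranked[:scroll_depth]  # only what the viewer scrolls past is seen
    selected = [s for s in exposed if s.url in clicked_urls]

    def cc_share(stories):
        return sum(s.cross_cutting for s in stories) / len(stories) if stories else 0.0

    return {
        "potential": cc_share(potential),
        "exposed": cc_share(exposed),
        "selected": cc_share(selected),
    }
```

Sorting by a score and truncating keeps ranking and scrolling as separate knobs. That mirrors the post's point that exposure depends on both.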

How much cross-cutting content people encounter depends on who their friends are and what information those friends share. If people were to acquire information from random others, approximately 45 percent of the content liberals would be exposed to would be cross-cutting, compared to 40 percent for conservatives. Of course, individuals do not encounter information at random, either offline or on the Internet.

How much of the cross-cutting content shared by your friends appears in News Feed? People are eligible to see all of the content shared by their friends in News Feed, but since people don’t have enough time in the day to see everything, we sort the content to show people what is most relevant to them. We found that 23 percent of news shared by liberals’ friends is cross-cutting, whereas what is seen in the News Feed is 22 percent. This corresponds to a risk ratio of 8 percent, meaning that people were 8 percent less likely to see countervailing articles that have been shared by friends, compared to the likelihood of seeing ideologically consistent articles that have been shared by friends. On the other hand, 34 percent of the content shared by conservatives’ friends is ideologically cross-cutting, versus 33 percent actually seen in the News Feed, corresponding to a risk ratio of 5 percent.

How much cross-cutting content that appears in News Feed do people actually click on? While 22 percent of the content seen by liberals was cross-cutting, we found that 20 percent of the content they actually clicked on was cross-cutting (meaning people are 6 percent less likely to click on countervailing articles that appeared in their News Feed, compared to the likelihood of clicking on ideologically consistent articles that appeared in their News Feed). For conservatives, 33 percent of the content seen in News Feed was cross-cutting, but only 29 percent of the content they clicked on was (corresponding to a risk ratio of 17 percent).

The diversity of content (1) shared by random others (random), (2) shared by friends (potential from network), (3) actually appearing in peoples’ News Feeds (exposed), (4) clicked on (selected).
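The risk ratios quoted above compare how likely a cross-cutting item is to pass a stage with how likely an aligned item is to pass it. Assuming that reading, the ratio can be recovered from the cross-cutting shares before and after the stage. Suppose a fraction p of items is cross-cutting, and cross-cutting and aligned items pass at rates a and b. The share after the stage is then q = pa / (pa + (1 - p)b). Solving gives a/b = [q/(1 - q)] / [p/(1 - p)]. The published percentages are rounded, so this recomputation only approximates the reported figures. The function name is hypothetical.

```python
def pass_rate_ratio(p_before, q_after):
    """Risk ratio a/b of cross-cutting vs. aligned pass rates, from shares before/after a stage.

    Uses q = p*a / (p*a + (1 - p)*b)  =>  a/b = odds(q) / odds(p).
    """
    if not (0 < p_before < 1 and 0 < q_after < 1):
        raise ValueError("proportions must lie strictly between 0 and 1")
    return (q_after / (1 - q_after)) / (p_before / (1 - p_before))


# Conservatives, News Feed -> clicks: 33% cross-cutting seen, 29% clicked.
rr = pass_rate_ratio(0.33, 0.29)
print(f"{1 - rr:.0%} less likely to click a cross-cutting item")  # 17% less likely ...
```

For this case, the recomputed 17 percent matches the figure reported in the text.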

When we look at people “on the margin” of encountering hard news on Facebook, we see more evidence of the important role individual choice plays. Take people whose friends shared at least one consistent and one cross-cutting story: 99 percent of them were exposed to at least one ideologically aligned item, and 96 percent encountered at least one ideologically cross-cutting item in News Feed. When we looked at who clicked through to hard news content, we found that 54 percent, more than half, clicked on ideologically cross-cutting content, although this is fewer than the 87 percent who clicked on ideologically aligned content.

Proportion of individuals with at least one cross-cutting and aligned story (1) shared by friends (potential), (2) actually appearing in peoples’ News Feeds (exposed), (3) clicked on (selected).
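The "on the margin" figures above are proportions of people rather than of stories. Here is a sketch of that tally. It assumes each person's items at a stage are given as a list of booleans (True for cross-cutting). Following the text, it keeps only people whose friends shared at least one aligned and one cross-cutting story. The data layout and function name are hypothetical.

```python
STAGES = ("potential", "exposed", "selected")


def at_least_one_rates(people):
    """people: list of dicts mapping stage name -> list of bools (True = cross-cutting).

    Returns {stage: (share with >= 1 aligned item, share with >= 1 cross-cutting item)}
    among people whose friends shared at least one story of each kind.
    """
    eligible = [p for p in people
                if any(p["potential"]) and not all(p["potential"])]
    if not eligible:
        return {}
    n = len(eligible)
    rates = {}
    for stage in STAGES:
        items = [p.get(stage, []) for p in eligible]
        aligned = sum(1 for xs in items if any(not x for x in xs))
        cross = sum(1 for xs in items if any(xs))
        rates[stage] = (aligned / n, cross / n)
    return rates
```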

Discussion

By showing that people are exposed to a substantial amount of content from friends with opposing viewpoints, our findings contrast with concerns that people might “listen and speak only to the like-minded” while online [2]. The composition of our social networks is the most important factor affecting the mix of content encountered on social media, with individual choice also playing a large role. News Feed ranking has a smaller impact on the diversity of information we see from the other side.

We believe that this work is just the beginning of a long line of research into how people are exposed to and consume media online. For more information, see our paper, which is available on open access at ScienceExpress:

Exposure to Ideologically Diverse News and Opinion. E. Bakshy, S. Messing, L. Adamic. Science.

References

[1] E. Bakshy, S. Messing, L. Adamic, Exposure to Ideologically Diverse News and Opinion, Science (2015).

[2] C. R. Sunstein, Republic.com 2.0 (Princeton University Press, 2007).

[3] E. Pariser, The Filter Bubble: What the Internet Is Hiding from You (Penguin Press, London, 2011).

[4] E. Bakshy, I. Rosenn, C. Marlow, L. Adamic, The Role of Social Networks in Information Diffusion, Proceedings of the 21st International Conference on World Wide Web (2012).

[5] S. Messing, S. J. Westwood, Selective Exposure in the Age of Social Media: Endorsements Trump Partisan Source Affiliation when Selecting News Online, Communication Research (2012).
